RESEARCH AND DEVELOPMENT

Spanning several projects and periods of research, this page documents my academic and programming work in the field of interactive media and design.

Screenshot from prototype generative audio game presented at OzCHI 2020.

In early 2020, I began researching ways to improve the responsiveness and interactivity of music systems in game engines and interactive experiences. This research aimed to develop a generative system that produces music at runtime, allowing creators to define custom scenarios for musical transitions and changes more precisely. Rendering the music at runtime also allowed tempo changes and transpositions to be applied at higher fidelity, without the distortion that comes from stretching or pitch-shifting pre-recorded audio.
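Because the music is rendered from note data rather than from recorded audio, a transposition amounts to an offset on each note number, and a tempo change only alters how beats map to seconds; no audio has to be resampled. The sketch below illustrates that idea under stated assumptions: it treats a phrase as a list of MIDI-style note events, and the names `Note`, `transpose`, `to_seconds` and `midi_to_hz` are hypothetical, not taken from the original project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    pitch: int     # MIDI note number, 0-127 (60 = middle C)
    start: float   # onset, in beats from the start of the phrase
    length: float  # duration, in beats


def transpose(notes, semitones):
    """Shift every note by a whole number of semitones, clamped to the MIDI range."""
    return [Note(min(127, max(0, n.pitch + semitones)), n.start, n.length)
            for n in notes]


def to_seconds(notes, bpm):
    """Map beat positions to (pitch, onset_s, duration_s) at a constant tempo."""
    seconds_per_beat = 60.0 / bpm
    return [(n.pitch, n.start * seconds_per_beat, n.length * seconds_per_beat)
            for n in notes]


def midi_to_hz(pitch):
    """Equal-temperament frequency of a MIDI note (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((pitch - 69) / 12.0)


# A C-major arpeggio, moved up a whole tone and played at 120 BPM.
phrase = [Note(60, 0.0, 1.0), Note(64, 1.0, 1.0), Note(67, 2.0, 2.0)]
print(to_seconds(transpose(phrase, 2), 120))
# [(62, 0.0, 0.5), (66, 0.5, 0.5), (69, 1.0, 1.0)]
```

Since each transposed note is synthesized at its exact equal-temperament frequency (for example, `midi_to_hz(69)` is 440 Hz), the timbre and duration of the notes are unaffected by the shift.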

The final product of this first round of research was a 2-D top-down shooter with a heavy focus on music and rhythm. All music was generated dynamically at runtime from a set of predefined loops and phrases, and it could shift in pitch and tempo through transition phrases and tempo gradient maps. Some limitations, notably the basic MIDI plugin used, ruled out stereo panning of individual tracks and custom instruments, so several planned features were deferred to future revisions of the work.
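One way to read "tempo gradient maps" is as a tempo curve that ramps between breakpoints; the sketch below assumes that interpretation, and the `TempoMap` class and its piecewise-linear shape are hypothetical rather than the project's actual data format. Because the tempo varies inside a ramp, converting a beat position to time means integrating seconds-per-beat, 60 / bpm. For a segment that starts at tempo t0 and changes by k BPM per beat, covering d beats takes (60 / k) · ln((t0 + k·d) / t0) seconds, or 60·d / t0 when k = 0.

```python
import math
from bisect import bisect_right


class TempoMap:
    """Hypothetical piecewise-linear tempo curve over beats. Tempo ramps
    linearly between (beat, bpm) breakpoints and holds the last value
    after the final breakpoint."""

    def __init__(self, points):
        # points: (beat, bpm) pairs; the first breakpoint should sit at beat 0
        self.points = sorted(points)
        self.beats = [b for b, _ in self.points]

    def bpm_at(self, beat):
        """Instantaneous tempo at a beat position."""
        i = bisect_right(self.beats, beat) - 1
        if i < 0:
            return self.points[0][1]
        if i >= len(self.points) - 1:
            return self.points[-1][1]
        (b0, t0), (b1, t1) = self.points[i], self.points[i + 1]
        return t0 + (t1 - t0) * (beat - b0) / (b1 - b0)

    def seconds_at(self, beat):
        """Elapsed seconds at `beat`: the integral of 60 / bpm(b) from beat 0."""
        total = 0.0
        for i, (b0, t0) in enumerate(self.points):
            if beat <= b0:
                break
            if i + 1 < len(self.points):
                b1, t1 = self.points[i + 1]
                slope = (t1 - t0) / (b1 - b0)  # BPM change per beat
            else:
                b1, slope = math.inf, 0.0  # hold the final tempo
            d = min(beat, b1) - b0  # beats covered inside this segment
            if slope == 0.0:
                total += 60.0 * d / t0
            else:
                # closed form of the integral of 60 / (t0 + slope * x) over [0, d]
                total += 60.0 / slope * math.log((t0 + slope * d) / t0)
        return total


# Hold 120 BPM for 8 beats, then slow to 60 BPM by beat 16.
ritardando = TempoMap([(0, 120), (8, 120), (16, 60)])
print(ritardando.bpm_at(12))      # 90.0
print(ritardando.seconds_at(8))   # 4.0
print(ritardando.seconds_at(16))  # about 9.545, i.e. 4 + 8 ln 2
```

With a map like this, a scheduler can place every note onset on a continuously changing tempo instead of jumping between fixed tempos at phrase boundaries.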

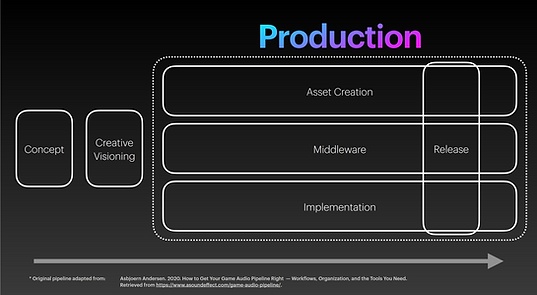

Re-imagined audio production pipeline used as the basis for the research work.

The research was then written up as a new paper and presented at the OzCHI 2020 Conference. In addition to valuable feedback from industry peers, I received an honorable mention for the work. The final paper can be accessed at https://doi.org/10.1145/3441000.3441075 or requested via email.

Capstone presentation video showcasing re-imagined work.

Building on the feedback received for the limited prototype presented at the conference, I continued the work as part of my capstone project. This second iteration aimed to migrate the engine from a 2-D to a 3-D environment and to make various improvements to the code. It also placed a greater focus on the visuals and on the spatial audio techniques documented in the original paper, resulting in a more polished overall experience.
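The specific spatial audio techniques from the paper are not detailed here. As a generic illustration only, the sketch below combines two common building blocks, an equal-power stereo pan law and inverse-distance attenuation, on a top-down 2-D plane. The function names, the convention that the listener faces +y, and the `ref_dist` parameter are all assumptions.

```python
import math


def equal_power_gains(pan):
    """Map pan in [-1, 1] (-1 = hard left, 1 = hard right) to (left, right) gains.
    Equal-power law: left**2 + right**2 == 1, so loudness stays steady across the sweep."""
    pan = max(-1.0, min(1.0, pan))
    theta = (pan + 1.0) * math.pi / 4.0  # map [-1, 1] onto [0, pi/2]
    return math.cos(theta), math.sin(theta)


def spatialize(listener, source, ref_dist=1.0):
    """Hypothetical 2-D spatializer: pan from the source's horizontal offset,
    volume from inverse-distance attenuation beyond ref_dist."""
    dx = source[0] - listener[0]
    dy = source[1] - listener[1]
    dist = math.hypot(dx, dy)
    pan = dx / dist if dist > 0 else 0.0     # sine of the azimuth, listener facing +y
    atten = ref_dist / max(dist, ref_dist)   # full volume inside ref_dist
    left, right = equal_power_gains(pan)
    return left * atten, right * atten


# A source 5 units away, ahead of and to the right of the listener.
left, right = spatialize(listener=(0.0, 0.0), source=(3.0, 4.0))
print(round(left, 3), round(right, 3))  # 0.062 0.19
```

An equal-power law is a common default because a linear crossfade (left = 1 - x, right = x) dips noticeably in loudness when a source passes through the center.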